AI, IoT, M2M, Big Data – The Alphabet Soup of Technology Jargon You Need to Understand (Part 1 of 2)

In 1984 I was seventeen years old and working as an usher in a movie theater when the science fiction thriller The Terminator was released. It was a surprise hit, and I must have seen the movie a couple of dozen times. In case you are not familiar with the movie, Arnold Schwarzenegger plays a human-like cyborg, a Terminator, sent from the future with a mission to kill Sarah Connor, the mother of the future resistance leader who is fighting the Terminator’s artificial intelligence master, Skynet. Aside from the obvious standout physique of Schwarzenegger (a former Mr. Universe and Mr. Olympia) and the incredible strength demonstrated by the cyborg, the Terminator looks and even acts somewhat human. To remind the audience that the Terminator is actually a very sophisticated computer, director James Cameron sometimes displays the action from the perspective of the Terminator.

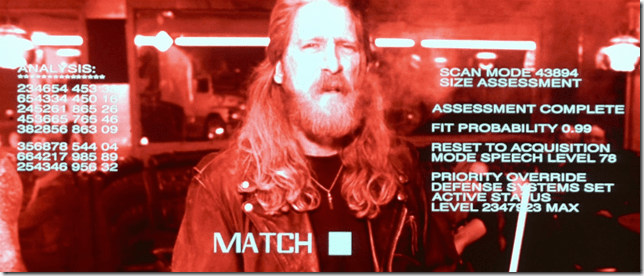

In these “look through” scenes, the audience is presented with a screen that is apparently the field of vision of the Terminator. The film color quality is replaced with mostly red, white and black imagery. Superimposed on the imagery is a bunch of scrolling text gibberish and some highlighted, flashing square boxes to call attention to certain data elements the Terminator may be analyzing – a person’s body size for suitable clothing, weapons in the hands of potential antagonists that must be foiled, etc. Of course, if the Terminator were really a sophisticated computer cyborg, there would not be an internal display barfing computer gibberish onto a screen in a manner that was readable by humans. Computers do not need human-readable text to operate on data the way humans do. The computer would simply be ingesting external data via the cyborg’s camera eyes and microphone ears along with any other external sensors for temperature, pressure, odor, and whatnot. Based on this observed data, the Terminator would be making judgments and taking actions that would have a high probability of creating a path to accomplish the mission – the termination of Sarah Connor. All of this would be happening without a human-readable screen display.

Why am I talking about The Terminator? Why is the detail of the Terminator’s view of the world as depicted by the movie director important? I am talking about The Terminator to illustrate the point that artificial intelligence (AI), the Internet of Things (IoT), big data, and all of the other alphabet soup puked up on a daily basis by technology media and vendors hyping their products are generally nothing more than the collective, gradual evolution of computers. In 1984, James Cameron could imagine a computer that understands and speaks natural language, sees real-time imagery, reacts to its environment, and takes actions to accomplish the mission. To portray the Terminator as a sophisticated AI being, Cameron showed the audience a visual model that generally represented what computers looked like to the masses in 1984 – a somewhat low-resolution screen with digitized text scrolling on it and an occasional selection option that would become highlighted if you tabbed a cursor to it (remember, the mouse was a new thing in 1984, as the first Apple Macintosh computers had just shipped that year). Cameron could not assume that the audience would make the leap to his futuristic interpretation of an AI-enabled cyborg, so he showed the audience a 1984 computer interface to make certain they got the connection. All this stuff in the media about AI, IoT, machine learning, big data, blah, blah, blah is just the real world catching up to what James Cameron predicted would happen way back in 1984.

Today we talk to our phones to have them dial our best friends. We issue verbal commands to our Alexa assistant to have it order pizza or play our favorite music. Our Nest thermostat monitors our habits, such as when we come and go, along with our preferences for ambient temperature, and raises or lowers the temperature where we live accordingly. These common applications of AI would have been totally foreign and inconceivable to a movie audience in 1984. But James Cameron had a vision of what artificial intelligence could potentially accomplish in the future, and he did a really good job presenting that vision to the audience in a way that they could understand. Let’s do a quick reset on some overhyped terms – AI, IoT, and big data.

Artificial Intelligence – AI is just the trend toward computers ingesting more diverse data in more formats (e.g. images, audio, natural language, pressure, temperature, humidity, etc.) to enable analysis that leads to judgments and actions related to accomplishing a mission or objective. Because AI is more of a trend than a definitive end state, AI can simply be classified the way Douglas Hofstadter, the well-known cognitive scientist, described it when quoting computer scientist Larry Tesler – “AI is whatever hasn’t been done yet.” More accurately, AI is simply the leading edge of new capability for computers to operate more intelligently on a broader diversity of data.

Internet of Things – IoT is simply a trend where more and more things are connected to the Internet to send or receive data or to act upon data received. Historically, connections to the Internet were people staring at screens (and increasingly listening to audio speakers) and entering data or responding to data received. “Things,” whether a cyborg like the Terminator or a $10 temperature sensor, don’t need screens (or keyboards, mice, or speakers) to send and receive data or to act upon data received.
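To make the idea of a screenless “thing” a little more concrete, here is a minimal Python sketch of a sensor reading being turned into an action with no human in the loop. Everything in it is an assumption made up for illustration: the `Reading` type, the `decide` and `run` functions, the sensor names, the temperature thresholds, and the action labels do not come from any real product.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One measurement from a hypothetical temperature sensor."""
    sensor_id: str
    temperature_f: float


def decide(reading: Reading, low_f: float = 34.0, high_f: float = 40.0) -> str:
    """Turn a reading into an action label (thresholds are illustrative only)."""
    if reading.temperature_f > high_f:
        return "dispatch_technician"
    if reading.temperature_f < low_f:
        return "raise_setpoint"
    return "no_action"


def run(readings: list[Reading]) -> list[tuple[str, str]]:
    """Judge each reading in turn; no screen, keyboard, or mouse is involved."""
    return [(r.sensor_id, decide(r)) for r in readings]


# Two made-up readings from made-up walk-in coolers.
actions = run([
    Reading("walk-in-cooler-1", 37.5),
    Reading("walk-in-cooler-2", 44.0),
])
```

In a real deployment the readings would arrive over a network and the actions would trigger something like a work order, but the shape is the same: data in, judgment, action out.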

Big Data – Big Data is simply the collection and analysis of data sets that are too large for humans to effectively parse, analyze, and extract intelligence from using simple programs like Excel. Ever cheaper storage and computing cycles lead to ever-increasing data collection, storage, and analysis. Again, big data is simply a trend and not a definitive end state.

Over time, computers will progress to read a broader spectrum of inputs, make more sophisticated judgments, and take an increasing variety of actions that lead to desired outcomes. No one was talking about AI in 1984 – no one in the mainstream media anyway – because the topic was confined to a small group of computer nerds at top technical institutions like Stanford and MIT. Yet the director of The Terminator could imagine a future where a computer becomes so powerful that it can measure its environment in a human-like manner, make judgments based upon those measurements, and take intelligent actions to execute a mission – in this case, the termination of Sarah Connor. It is unlikely that anyone who saw The Terminator in 1984 remembers the on-screen effects that Cameron used to connect the audience to the idea that the Terminator was a computer. I bet everyone who saw the movie remembers the Terminator’s mission, however. What was the mission? To terminate Sarah Connor, of course.

Whether an innovation can correctly be labeled as AI (or with any other overhyped term of the day) is far less important than whether the innovation helps accomplish the mission. The Terminator’s mission was to terminate Sarah Connor, and the Terminator was extremely well suited for carrying out the mission (although it actually failed in this case). Defining the mission that you would like to accomplish with AI, IoT, big data, etc. is actually much more important, in my humble opinion, than the actual technology you select to achieve the mission. Have you thought about the mission that you want to accomplish using technology?

I believe the mission you are generally attempting to accomplish through technology is to maximize customer equipment performance while eliminating equipment failures so that your customer experiences the least risk, expense, and disruption in their business. Technology is important as an enabler of this mission because it is generally cheaper and (sometimes) easier to manage than people. If you accomplish this mission, your customer will spend zero dollars recovering from disruptions (lost output, spoiled inventory, damaged property, emergency services) while maximizing the amount of money they spend with you relative to other suppliers.

I will continue this topic next week with real-world examples of how AI, IoT, and big data are being used by service contractors today. I’ll also have some advice to make sure that what you’re building is more like a high-performing Terminator than a cobbled-together Frankenstein’s monster.